Extended Reality (XR) technologies have transformed how we experience and interact with the world, merging virtual environments with physical realities. However, one of the ongoing challenges for XR systems is enhancing the level of immersion experienced by users. Immersion, the sense of being enveloped in a digital environment, is key to making XR experiences more engaging and believable. The integration of multi-sensory media, such as sound, sight, touch, and even smell, has the potential to elevate these immersive experiences, creating a more lifelike and engaging reality for remote and in-person participants alike.

The HEAT project, funded by the European Union’s Horizon Europe program, aims to push the boundaries of multi-sensory integration in XR environments. Alongside its work on holographic real-time communications, the project applies multi-sensory elements such as olfactory feedback, wind effects, haptics, and spatial audio to create fully immersive experiences. One notable aspect of the HEAT project is its effort to integrate these sensory components into live performances, including theater, concerts, and other events, enhancing the interaction between performers and their audiences.

In this blog post, we’ll explore how the HEAT project is adding immersion to XR experiences, particularly through the integration of multi-sensory media, and how this approach offers more than just a visual or auditory experience.

The Role of Multi-sensory Media in XR

To fully appreciate the potential of multi-sensory media in XR, it’s important to understand its role in enhancing realism. Traditional XR experiences have primarily focused on visual and auditory stimuli, offering participants the chance to view virtual environments and hear soundtracks and dialogue. While these elements contribute substantially to the experience, on their own they provide only limited immersion. Additional sensory information, such as smell or the feeling of wind, can make virtual experiences feel more real and tangible.

The integration of multi-sensory media in XR has a wide range of potential applications beyond live performances: it can enhance virtual tourism, gaming, education, and remote working environments by offering a richer, more immersive experience. For instance, a virtual tour of a historical site could involve not just seeing and hearing the environment but also experiencing the smells of the surroundings, like fresh flowers or the sea breeze, providing a more realistic and engaging way to explore new places.

In education, multi-sensory learning environments can enhance the comprehension and retention of information. For example, a virtual chemistry class could trigger scents associated with certain chemical reactions, making the learning experience more memorable and tangible.

The incorporation of olfactory feedback is one of the innovations explored in the HEAT project. Smell is a powerful sense that has a unique ability to trigger memories and emotions. When integrated into XR, scents can transform the user experience, evoking emotional responses. In the context of live performances, specific scents and effects can be triggered by key moments in a song or scene in a play, further enhancing the mood and atmosphere of the event. Not only can these effects be experienced by those attending the physical event, but they can also be delivered to remote viewers through XR headsets and reconstructed holograms via point clouds.

For example, in the cases of a blues performance and a theatrical play, the HEAT project employs technologies like speech recognition and convolutional neural networks to identify important moments in the performance. When specific lines are sung, or certain scenes are highlighted, effects such as scents and wind can be activated. These sensory cues are synchronized with the live performances, providing remote users with a more immersive experience by engaging their sense of smell and touch, in addition to sight and sound.
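As a rough illustration of the trigger step described above, the sketch below matches a speech-recognition transcript against a small cue sheet and returns the effects that should fire. The `Cue` structure, the example phrases, and the effect names are all hypothetical and are not taken from the HEAT project; the speech recognizer and the neural network are assumed to run elsewhere and simply hand over text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    phrase: str  # line or lyric that marks a key moment (hypothetical)
    effect: str  # kind of effect to trigger, e.g. "scent" or "wind"
    value: str   # scent name or fan level (hypothetical)


# Hypothetical cue sheet for one performance.
CUES = [
    Cue("smell of rain", "scent", "petrichor"),
    Cue("storm is coming", "wind", "high"),
]


def normalize(text: str) -> str:
    """Lowercase the text and replace punctuation with spaces."""
    cleaned = "".join(c if c.isalnum() or c.isspace() else " " for c in text.lower())
    return " ".join(cleaned.split())


def match_cues(transcript, cues=CUES, fired=None):
    """Return cues whose phrase occurs in the transcript and has not fired yet."""
    fired = set() if fired is None else fired
    text = f" {normalize(transcript)} "
    hits = []
    for cue in cues:
        phrase = normalize(cue.phrase)
        # Pad with spaces so matches respect word boundaries.
        if f" {phrase} " in text and phrase not in fired:
            fired.add(phrase)
            hits.append(cue)
    return hits
```

Passing the same `fired` set on every call keeps a cue from triggering twice when the recognizer repeats a line. A production system would likely need fuzzier matching than exact phrases, since live transcripts contain recognition errors.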

The Technology Behind the HEAT Project

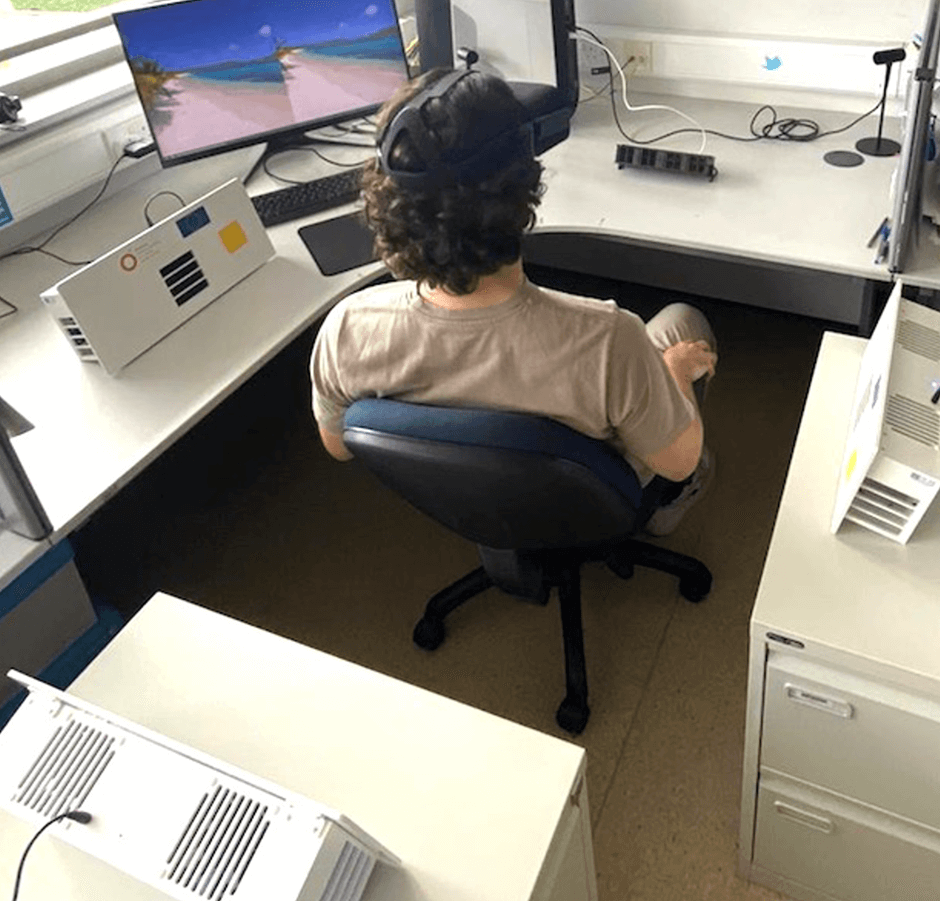

The HEAT project employs a range of key technologies to enable multi-sensory integration. In addition to VR headsets, these technologies include olfactory dispensers, fans, heaters, and haptic gloves and chairs. These devices feature various communication interfaces, such as SDKs, APIs, and both wired and wireless connections. Given the diversity of these devices, it is essential to use a unified platform that can seamlessly integrate them. Conducting participant experiments is important to ensure that the sensory effects are not only synchronized accurately but also comfortable and non-intrusive. Networking protocols such as MQTT are particularly useful in minimizing latency when activating remote devices, ensuring that the multi-sensory experience is responsive.
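To make the idea of a unified platform more concrete, here is a minimal sketch of a device-independent command format sent over a publish/subscribe channel such as MQTT. The topic layout, field names, and function names are assumptions made for illustration and do not describe the project's actual message format. The `publish` argument is any callable that takes a topic and a payload, so the sketch runs without a broker.

```python
import json
import time
from typing import Callable

# Hypothetical topic layout: one topic per room and device type.
TOPIC_TEMPLATE = "heat/{room}/{device}"


def build_command(device: str, action: str, intensity: float, duration_s: float) -> dict:
    """Describe an effect in one shape, whatever the target device is."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    return {
        "device": device,
        "action": action,
        "intensity": intensity,
        "duration_s": duration_s,
        "sent_at": time.time(),  # sender clock; useful only if clocks are synchronized
    }


def send_command(publish: Callable[[str, str], object], room: str, command: dict) -> str:
    """Serialize the command to JSON and publish it on the device's topic."""
    topic = TOPIC_TEMPLATE.format(room=room, device=command["device"])
    publish(topic, json.dumps(command))
    return topic
```

With a real MQTT client, the `publish` method of a connected client (for example the one in the paho-mqtt library, which takes the topic and payload as its first two arguments) could be passed in directly. Each device adapter would then subscribe to its own topic and translate the JSON command into whatever SDK or API call the hardware expects.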

Challenges and Future Directions

Despite the many possibilities, the integration of multi-sensory media into XR is not without challenges. The need for low-latency communication, the synchronization of sensory stimuli, and the development of reliable and cost-effective sensory devices remain key issues. In particular, adding smell to XR experiences requires careful control so that scents are released at the right time and with the appropriate intensity.
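The timing problem can be illustrated with a small calculation: because a scent takes time to travel over the network and then through the air to the user, the command has to be sent ahead of the moment it should be perceived. The sketch below assumes that both delays are known or measured in advance, and the intensity ceiling is an arbitrary placeholder rather than a value from the project.

```python
def dispatch_time(cue_time_s: float, network_latency_s: float,
                  onset_delay_s: float, now_s: float = 0.0) -> float:
    """Time at which to send the command so the effect is perceived at cue_time_s.

    onset_delay_s is the assumed time between the device activating and the
    user noticing the effect. If the ideal send time has already passed,
    send immediately (at now_s).
    """
    ideal = cue_time_s - network_latency_s - onset_delay_s
    return max(now_s, ideal)


def clamp_intensity(requested: float, ceiling: float = 0.6) -> float:
    """Keep the requested intensity between 0 and a comfort ceiling (placeholder)."""
    return max(0.0, min(requested, ceiling))
```

For a cue at 120 seconds with 0.25 seconds of network latency and a 2-second onset delay, the command would be sent at 117.75 seconds. Appropriate delay and ceiling values would have to come from measurements and participant experiments.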

Looking to the future, the HEAT project is exploring ways to refine and expand its multi-sensory approach. This includes experimenting with delays and multiple scents, integrating new sensory effects such as temperature changes and haptic feedback, and improving the overall user experience. The project will also examine the feasibility of incorporating more advanced technologies, such as artificial scent detection mechanisms (e-noses), to further enhance the precision and automation of scent feedback.
